Photo by editor

# Introduction

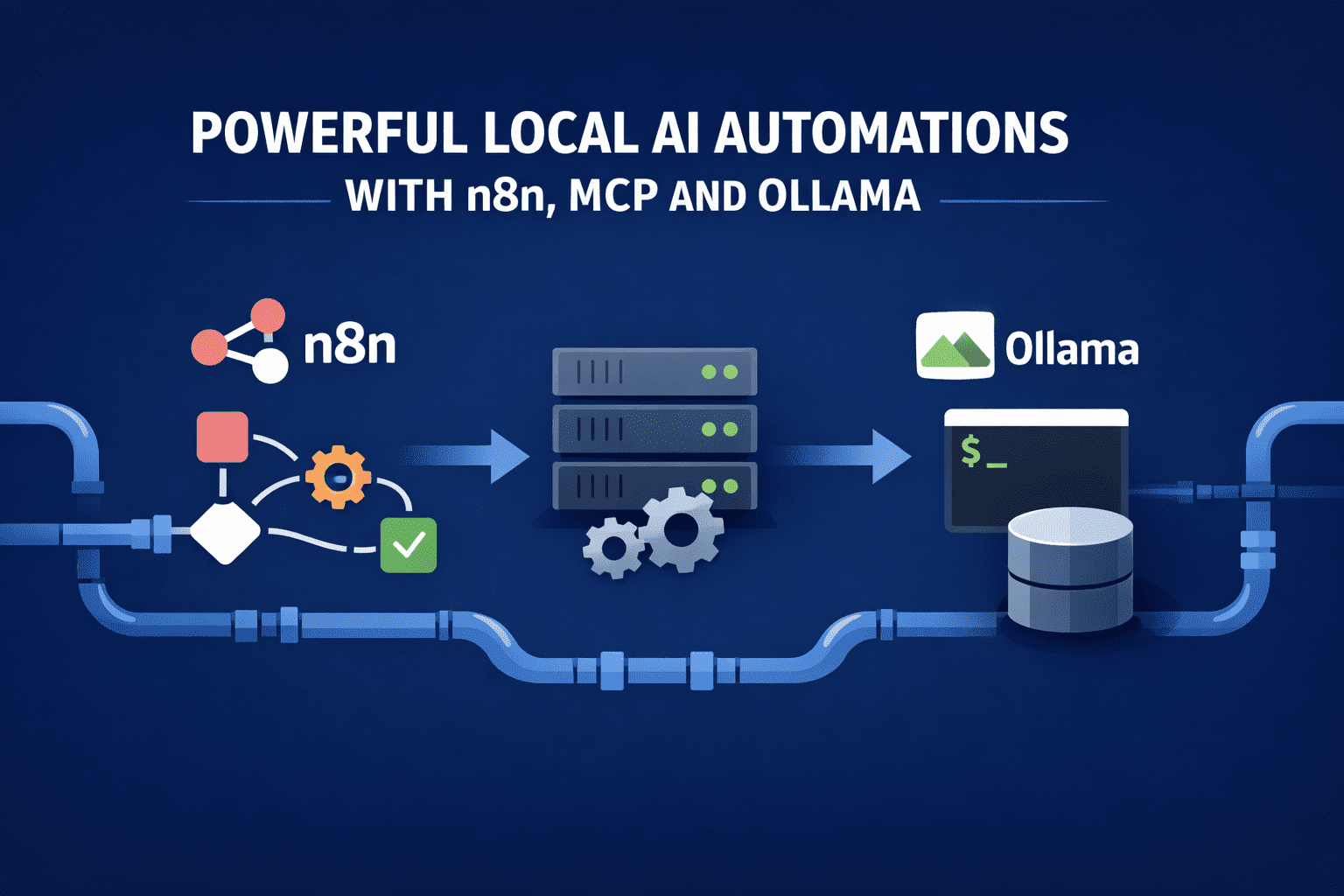

Running large language models (LLMs) locally only matters if they do real work. The value of N8N, the Model Context Protocol (MCP), and Ollama is not architectural elegance but the ability to automate tasks that would otherwise require engineers in the loop.

This stack works when each component has a concrete responsibility: N8N orchestrates, MCP constrains tool use, and Ollama reasons over local data.

The ultimate goal is to run these automations on a single workstation or small server, taking the place of fragile scripts and expensive API-based systems.

# Automated log triage with root-cause hypothesis generation

The automation starts with N8N polling a local log directory or a Kafka consumer every five minutes. N8N performs deterministic preprocessing: grouping by service, truncating repetitive stack traces, and extracting timestamps and error codes. Only the condensed log bundles are passed to Ollama.
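
A minimal sketch of that preprocessing step as a standalone Python function. The log line format, the error-code pattern, and the five-frame truncation limit are all assumptions for illustration, not part of the original workflow.

```python
import re
from collections import defaultdict

# Assumed log format: "<timestamp> <service> <LEVEL> <message>", with stack frames
# on indented continuation lines. Adjust the patterns to your real logs.
LINE_RE = re.compile(r"^(?P<ts>\S+)\s+(?P<service>\S+)\s+(?P<level>[A-Z]+)\s+(?P<msg>.*)$")
ERROR_CODE_RE = re.compile(r"\b[A-Z]{2,}-\d{3,}\b")  # hypothetical code shape, e.g. "DB-5001"
MAX_TRACE_LINES = 5  # keep only the top frames of each stack trace


def condense_logs(lines):
    """Group records by service, truncate stack traces, and extract timestamps and error codes."""
    bundles = defaultdict(lambda: {"timestamps": [], "error_codes": set(), "events": []})
    current, trace_lines = None, 0
    for raw in lines:
        if not raw.strip():
            continue  # skip blank lines
        match = LINE_RE.match(raw)
        if match is None:
            # Continuation line (e.g. a stack frame) that belongs to the previous record.
            if current is not None and trace_lines < MAX_TRACE_LINES:
                current["events"][-1] += "\n" + raw.strip()
                trace_lines += 1
            continue
        current = bundles[match["service"]]
        trace_lines = 0
        current["timestamps"].append(match["ts"])
        current["error_codes"].update(ERROR_CODE_RE.findall(match["msg"]))
        current["events"].append(f'{match["level"]} {match["msg"]}')
    # Sorted lists instead of sets so each bundle is JSON-serializable for the model request.
    return {svc: {**b, "error_codes": sorted(b["error_codes"])} for svc, b in bundles.items()}
```

Because the function is pure and deterministic, the same input always produces the same bundle, which keeps the model's input small and reproducible.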

The local model receives a tightly scoped prompt asking it to cluster failures, identify the first causal event, and generate two to three plausible root-cause hypotheses. MCP exposes a single tool: `query_recent_deployments`. When the model requests it, N8N executes the query against the deployment database and returns the result. The model then refines its hypotheses and outputs structured JSON.
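
The shape of that single tool, sketched in Python. MCP servers describe tools with a `name`, a `description`, and a JSON Schema `inputSchema`, and tool results carry a list of `content` items; the argument names (`service`, `hours`), the 72-hour cap, and the in-memory `DEPLOYMENTS` list standing in for the deployment database are hypothetical. The dispatcher refuses any tool name that was not exposed, which is the point of limiting the model to one tool.

```python
import json
from datetime import datetime, timedelta, timezone

# Tool descriptor in the shape MCP servers advertise via tools/list.
# The argument names and bounds are illustrative assumptions.
QUERY_RECENT_DEPLOYMENTS = {
    "name": "query_recent_deployments",
    "description": "List deployments of one service within the last N hours.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "service": {"type": "string"},
            "hours": {"type": "integer", "minimum": 1, "maximum": 72},
        },
        "required": ["service"],
    },
}

# Hypothetical stand-in for the deployment database.
NOW = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
DEPLOYMENTS = [
    {"service": "api", "version": "v1.4.2", "deployed_at": NOW - timedelta(hours=2)},
    {"service": "api", "version": "v1.4.1", "deployed_at": NOW - timedelta(hours=30)},
    {"service": "worker", "version": "v0.9.0", "deployed_at": NOW - timedelta(hours=1)},
]


def handle_tool_call(name, arguments, now=None):
    """Execute a tool request from the model; anything not explicitly exposed is refused."""
    if name != QUERY_RECENT_DEPLOYMENTS["name"]:
        return {"isError": True, "content": [{"type": "text", "text": f"unknown tool: {name}"}]}
    now = now or datetime.now(timezone.utc)
    hours = min(max(int(arguments.get("hours", 24)), 1), 72)  # clamp to the schema bounds
    cutoff = now - timedelta(hours=hours)
    rows = [
        {**d, "deployed_at": d["deployed_at"].isoformat()}
        for d in DEPLOYMENTS
        if d["service"] == arguments.get("service") and d["deployed_at"] >= cutoff
    ]
    return {"isError": False, "content": [{"type": "text", "text": json.dumps(rows)}]}
```

Clamping `hours` on the server side means a model that ignores the schema still cannot run an unbounded query.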

N8N stores the output, posts a summary to an internal Slack channel, and opens a ticket when confidence exceeds a set threshold. No cloud LLM is involved, and the model never sees raw, unprocessed logs.
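
Before acting on the model's answer, N8N has to check that it really is the structured JSON it asked for. A sketch of that gate, assuming a hypothetical output shape of `{"hypotheses": [{"cause": ..., "confidence": ...}]}` and an illustrative threshold of 0.8; the action names are placeholders for the corresponding N8N nodes.

```python
import json

CONFIDENCE_THRESHOLD = 0.8  # illustrative; set per team


def route_triage(raw_model_output, threshold=CONFIDENCE_THRESHOLD):
    """Validate the model's JSON and return the N8N actions to run (placeholder names)."""
    try:
        result = json.loads(raw_model_output)
    except json.JSONDecodeError:
        return ["reject"]
    hypotheses = result.get("hypotheses") if isinstance(result, dict) else None
    if not isinstance(hypotheses, list) or not 2 <= len(hypotheses) <= 3:
        return ["reject"]  # the prompt asks for two to three hypotheses
    confidences = []
    for h in hypotheses:
        conf = h.get("confidence") if isinstance(h, dict) else None
        if not isinstance(conf, (int, float)) or not 0.0 <= conf <= 1.0 or not h.get("cause"):
            return ["reject"]
        confidences.append(conf)
    actions = ["store", "post_slack_summary"]
    if max(confidences) > threshold:
        actions.append("open_ticket")
    return actions
```

A `reject` result would typically send the workflow back to the model with a corrective prompt rather than posting anything.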

# Continuous data quality monitoring for analytics pipelines

N8N monitors incoming batch tables in the local warehouse and diffs their schemas against historical baselines. When drift is detected, the workflow sends a compact description of the change to Ollama instead of the full dataset.
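
A sketch of the schema diff, assuming schemas are available as `{column: type}` dictionaries (for example, read from the warehouse's information schema). Only the short text from `describe_drift` would be sent to Ollama; the function names and the output format are illustrative.

```python
def diff_schema(baseline, current):
    """Compare two {column: type} mappings and list added, removed, and retyped columns."""
    added = [f"{col}:{current[col]}" for col in sorted(current.keys() - baseline.keys())]
    removed = sorted(baseline.keys() - current.keys())
    type_changed = [
        f"{col}: {baseline[col]} -> {current[col]}"
        for col in sorted(baseline.keys() & current.keys())
        if baseline[col] != current[col]
    ]
    return {"added": added, "removed": removed, "type_changed": type_changed}


def describe_drift(table, diff):
    """Build the compact change description for the model, or None when nothing changed."""
    if not any(diff.values()):
        return None  # no drift: the model is not called at all
    lines = [f"table {table}"]
    for kind, items in diff.items():
        if items:
            lines.append(f"{kind}: {', '.join(items)}")
    return "\n".join(lines)
```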

The model is instructed to classify the drift as benign, suspicious, or breaking. MCP exposes two tools: `sample_row` and `compute_column_stats`. The model invokes these tools selectively, inspects the returned values, and produces a classification with a human-readable explanation.
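
What a `compute_column_stats` tool might return, sketched over an in-memory column. The real tool would read from the warehouse and take table and column arguments; the statistics chosen here (null fraction, distinct count, and min/max/mean for numeric columns) are assumptions about what helps the model classify drift.

```python
import statistics


def compute_column_stats(values):
    """Summary statistics for one column, as a compute_column_stats tool might return them."""
    non_null = [v for v in values if v is not None]
    stats = {
        "count": len(values),
        "null_fraction": (len(values) - len(non_null)) / len(values) if values else 0.0,
        "distinct": len(set(non_null)),
    }
    # Numeric summaries only when every non-null value is a number (bools excluded).
    numeric = [v for v in non_null if isinstance(v, (int, float)) and not isinstance(v, bool)]
    if numeric and len(numeric) == len(non_null):
        stats.update(min=min(numeric), max=max(numeric), mean=statistics.fmean(numeric))
    return stats
```

Returning a handful of aggregates instead of rows keeps the tool response small and avoids leaking raw records into the prompt.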

If the drift is classified as breaking, N8N automatically pauses downstream pipelines and annotates the event with the model's reasoning. Over time, teams accumulate a searchable archive of past schema changes and decisions, all generated locally.

# Autonomous dataset labeling and validation loops for machine learning pipelines

This automation is designed for teams training models on continuously arriving data, where manual labeling becomes a bottleneck. N8N monitors a local drop location or database table and batches new, unlabeled records at fixed intervals.

Each batch is deterministically preprocessed to remove obvious outliers, normalize fields, and attach minimal metadata.
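
One way to implement that deterministic step, using the interquartile-range rule for outliers on a single numeric field. This is a swapped-in choice, since the text does not name a method; the field names and the `_meta` key are hypothetical.

```python
import statistics


def preprocess_batch(records, numeric_field, batch_id):
    """Drop IQR outliers on one numeric field, normalize strings, attach minimal metadata."""
    values = [r[numeric_field] for r in records]
    low, high = float("-inf"), float("inf")
    if len(values) >= 4:  # too few points for meaningful quartiles otherwise
        q1, _, q3 = statistics.quantiles(values, n=4)
        iqr = q3 - q1
        low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    cleaned = []
    for record in records:
        if not low <= record[numeric_field] <= high:
            continue  # outlier: never sent to the model
        row = {k: v.strip().lower() if isinstance(v, str) else v for k, v in record.items()}
        row["_meta"] = {"batch_id": batch_id, "source_size": len(records)}
        cleaned.append(row)
    return cleaned
```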

Ollama receives only the cleaned batch and is instructed to produce labels with confidence scores, not free text. MCP exposes a constrained toolset so the model can validate its output against historical distributions and sampling checks before anything is accepted. N8N then decides whether the labels are auto-approved, partially approved, or sent to humans.

Key components of the loop:

- Initial label generation: The local model assigns labels and confidence values strictly according to the provided schema and examples, producing structured JSON that N8N can validate directly.

- Statistically grounded validation: Through an MCP tool, the model requests label distribution statistics from previous batches and flags deviations that suggest concept drift or misclassification.

- Low-confidence escalation: N8N automatically routes samples below a confidence threshold to human reviewers and accepts the rest, keeping throughput high without sacrificing accuracy.

- Feedback reinjection: Human corrections are fed back into the system as new reference examples, which the model can retrieve via MCP in future batches.
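
The validation and escalation steps above can be sketched as a single routing function. It uses total variation distance between the batch's label distribution and the historical one as the deviation measure; that metric, both thresholds, and the prediction record shape are assumptions, not part of the described system.

```python
from collections import Counter

CONFIDENCE_THRESHOLD = 0.85  # assumed; tune against human review capacity
MAX_TV_DISTANCE = 0.2        # assumed tolerance for label-distribution shift


def label_distribution(labels):
    """Share of each label in a list of labels."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()} if total else {}


def total_variation(p, q):
    """Total variation distance between two discrete distributions (0 = identical, 1 = disjoint)."""
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in p.keys() | q.keys())


def route_batch(predictions, historical):
    """predictions: [{"id", "label", "confidence"}]; historical: {label: share} from past batches."""
    drift = total_variation(label_distribution([p["label"] for p in predictions]), historical)
    if drift > MAX_TV_DISTANCE:
        # The batch as a whole looks unusual (possible concept drift): hold everything for review.
        return {"status": "sent_to_humans", "accepted": [], "review": list(predictions), "drift": drift}
    accepted = [p for p in predictions if p["confidence"] >= CONFIDENCE_THRESHOLD]
    review = [p for p in predictions if p["confidence"] < CONFIDENCE_THRESHOLD]
    status = "partially_approved" if review else "auto_approved"
    return {"status": status, "accepted": accepted, "review": review, "drift": drift}
```

Checking the batch-level distribution before per-sample confidence matters: a model that is confidently wrong about a whole batch would otherwise pass every individual threshold.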

This creates a closed-loop labeling system that scales locally, improves over time, and removes humans from the critical path unless they are truly needed.

# Self-updating research briefs from internal and external sources

This automation runs on a nightly schedule. N8N pulls new commits from a curated set of repositories, recent internal documents, and archived articles. Each item is chunked and embedded locally.
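
A minimal sketch of the chunking step, using overlapping word windows. The window and overlap sizes are arbitrary assumptions; each chunk would then be passed to an embedding model served locally (Ollama can serve embedding models), which is not shown here.

```python
def chunk_text(text, max_words=200, overlap=40):
    """Split text into overlapping word windows so context spanning a boundary isn't lost."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reaches the end of the text
    return chunks
```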

Ollama, whether run from the terminal or a GUI, is instructed to update the existing research brief rather than create a new one. MCP exposes retrieval tools that let the model query prior summaries and embeddings. The model identifies what has changed, rewrites only the affected sections, and flags inconsistencies or outdated claims.

N8N commits the updated brief back to a repository and logs a diff. The result is a living document maintained without manual rewriting, driven entirely by local inference.
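
Logging the diff needs nothing beyond the standard library. A sketch using `difflib.unified_diff`, plus a helper that reports which `## ` sections changed, assuming the brief is a Markdown file organized by second-level headings; the file name is a placeholder.

```python
import difflib


def brief_diff(old_brief, new_brief, path="research_brief.md"):
    """Unified diff between two versions of the brief, suitable for a change log."""
    return "".join(difflib.unified_diff(
        old_brief.splitlines(keepends=True),
        new_brief.splitlines(keepends=True),
        fromfile=f"a/{path}",
        tofile=f"b/{path}",
    ))


def split_sections(markdown):
    """Map each '## ' heading (or '_preamble') to the text of its section."""
    sections, title = {}, "_preamble"
    for line in markdown.splitlines():
        if line.startswith("## "):
            title = line[3:].strip()
        sections.setdefault(title, []).append(line)
    return {t: "\n".join(lines) for t, lines in sections.items()}


def changed_sections(old_brief, new_brief):
    """Headings whose section text was added, removed, or rewritten."""
    a, b = split_sections(old_brief), split_sections(new_brief)
    return sorted(t for t in a.keys() | b.keys() if a.get(t) != b.get(t))
```

The section list gives a quick check that the model really rewrote only the parts it claimed to.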

# Automated incident postmortems with evidence linking

When an incident is closed, N8N collects alerts, logs, and deployment events into a timeline. Instead of asking the model to write a narrative blindly, the workflow feeds it the timeline in strict chronological blocks.

The model is instructed to produce a postmortem with explicit references to timeline events. MCP exposes a `fetch_event_details` tool the model can call when context is missing. Every paragraph in the final report cites a concrete evidence ID.

N8N rejects any output that lacks references and re-prompts the model. The final document is consistent, auditable, and generated without exposing operational data externally.
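
A sketch of the reference check N8N could apply before accepting a postmortem. It assumes evidence IDs are cited inline as `[EV-<number>]` and that paragraphs are separated by blank lines; both conventions are hypothetical. Paragraphs with no citation, or with an ID that does not exist in the collected timeline, trigger a re-prompt.

```python
import re

EVIDENCE_RE = re.compile(r"\[(EV-\d+)\]")  # assumed citation format, e.g. "[EV-12]"


def unreferenced_paragraphs(report, known_ids):
    """Indexes of paragraphs that cite no evidence or cite IDs missing from the timeline."""
    paragraphs = [p for p in report.split("\n\n") if p.strip()]
    bad = []
    for i, para in enumerate(paragraphs):
        cited = set(EVIDENCE_RE.findall(para))
        if not cited or not cited <= known_ids:
            bad.append(i)
    return bad


def review_postmortem(report, known_ids):
    """Accept the report, or return a corrective prompt naming the offending paragraphs."""
    bad = unreferenced_paragraphs(report, known_ids)
    if bad:
        return {
            "accepted": False,
            "reprompt": f"Paragraphs {bad} lack valid evidence references. "
                        "Cite a timeline evidence ID for every claim.",
        }
    return {"accepted": True}
```

Checking IDs against the known set, not just the citation format, also catches references the model invented.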

# Local contract and policy review automation

Legal and compliance teams run this automation on internal machines. N8N ingests new contract drafts and policy updates, strips formatting, and segments the text into clauses.

Ollama is asked to compare each clause against an approved baseline and flag deviations. MCP exposes a `retrieve_standard_clause` tool that lets the model pull canonical language. The output includes exact clause references, risk levels, and recommended revisions.

N8N routes high-risk findings to human reviewers and auto-approves unmodified sections. Sensitive documents never leave the local environment.
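
A sketch of the deterministic parts around the model: splitting plain text into numbered clauses and routing each clause. It assumes clauses start with numbers like `1.` or `2.1`, and that the model's risk level is a simple string such as `"high"`; case and whitespace are ignored when deciding that a clause is unmodified. All names here are hypothetical.

```python
import re

# Assumed clause numbering: "1.", "2.1", "3.2.1" at the start of a line.
CLAUSE_RE = re.compile(r"^(\d+(?:\.\d+)*)\.?\s+(.*)$")


def split_clauses(plain_text):
    """Map clause numbers to their text, joining wrapped continuation lines."""
    clauses, current = {}, None
    for line in plain_text.splitlines():
        line = line.strip()
        match = CLAUSE_RE.match(line)
        if match:
            current = match.group(1)
            clauses[current] = match.group(2)
        elif current and line:
            clauses[current] += " " + line
    return clauses


def normalize(text):
    """Ignore case and whitespace so reformatting alone doesn't count as a change."""
    return " ".join(text.lower().split())


def route_clause(clause_text, standard_text, model_risk=None):
    """Decide what N8N does with one clause; model_risk is the model's label, if any."""
    if normalize(clause_text) == normalize(standard_text):
        return "auto_approve"  # unmodified: no model call or human needed
    if model_risk is None:
        return "needs_model_review"
    return "human_review" if model_risk == "high" else "attach_recommendation"
```

A body line that happens to start with a number would be misread as a new clause, so real contracts likely need a sturdier splitter.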

# Tool-assisted code review for internal repositories

This workflow is triggered by pull requests. N8N fetches the diff and test results, then sends them to Ollama with instructions to focus only on logic changes and potential failure modes.

Through MCP, the model can call `run_static_analysis` and `query_test_failures`. It uses these results to ground its review comments. N8N posts comments only when the model identifies concrete, reproducible issues.
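
A sketch of the posting filter. It assumes each model comment lists the evidence it relies on, using hypothetical keys such as `static:<rule>` or `test:<name>`, and keeps only comments whose evidence matches what the tools actually returned. Style remarks with no evidence are dropped.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewComment:
    path: str
    line: int
    body: str
    # Hypothetical evidence keys, e.g. "static:W0612" or "test:test_refund_rounding".
    evidence: list = field(default_factory=list)


def postable_comments(comments, static_findings, failing_tests):
    """Keep comments whose every cited evidence key matches a real tool result."""
    known = {f"static:{rule}" for rule in static_findings} | {f"test:{name}" for name in failing_tests}
    return [c for c in comments if c.evidence and all(e in known for e in c.evidence)]
```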

The result is a code reviewer that skips style opinions and comments only when the evidence supports the claim.

# Final thoughts

Each example limits the model's scope, exposes only the tools it needs, and relies on N8N for execution. Local inference is fast enough to make these workflows practical and cheap enough to run continuously. More importantly, it keeps reasoning close to the data and execution under strict control, where it belongs.

This is where N8N, MCP, and Ollama stop being infrastructure experiments and start working as a practical automation stack.

Nahla Davies is a software developer and tech writer. Before devoting her career full-time to technical writing, she managed, among other interesting things, to serve as a lead programmer at an Inc. 5,000 experiential branding organization whose clients include Samsung, Time Warner, Netflix, and Sony.