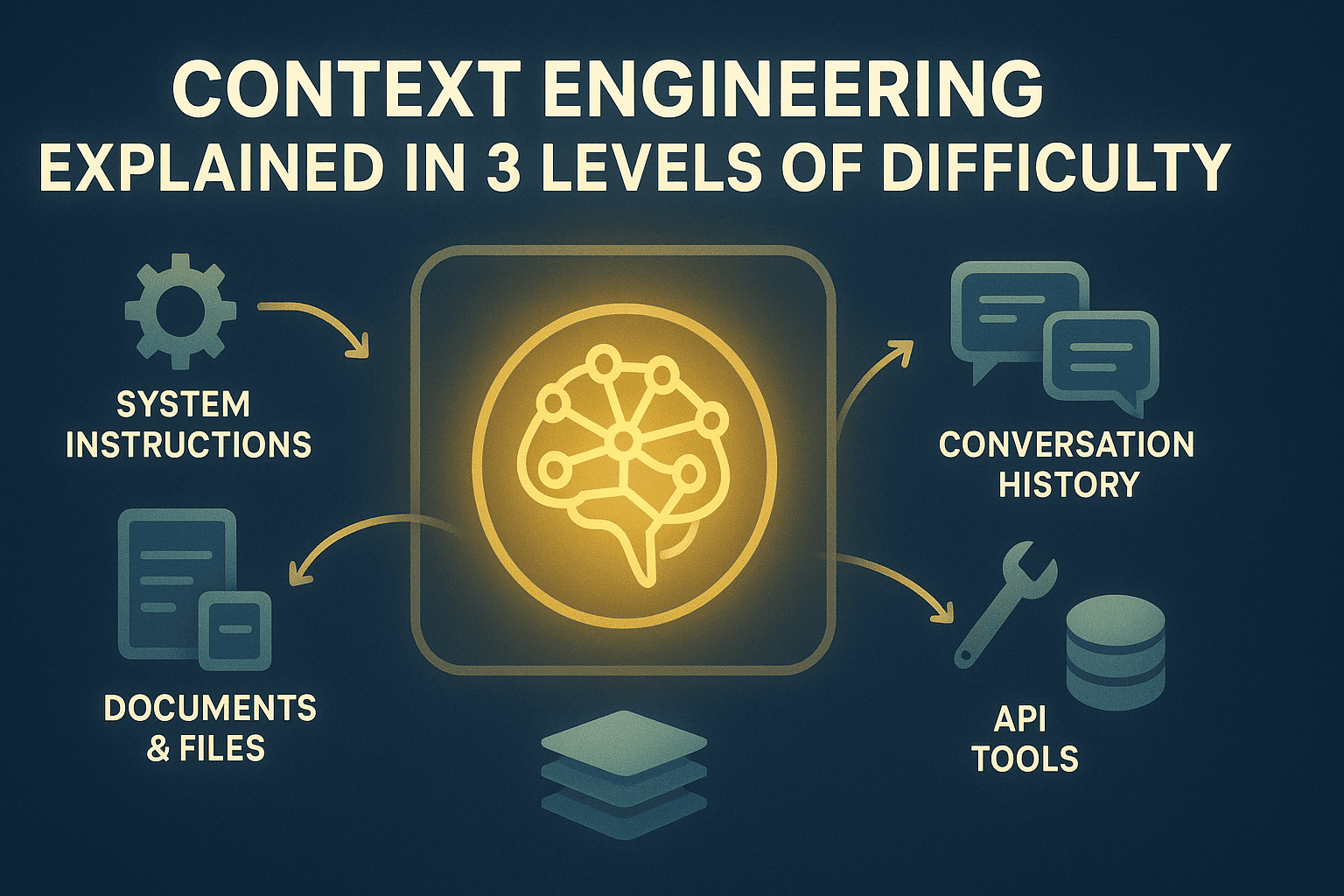

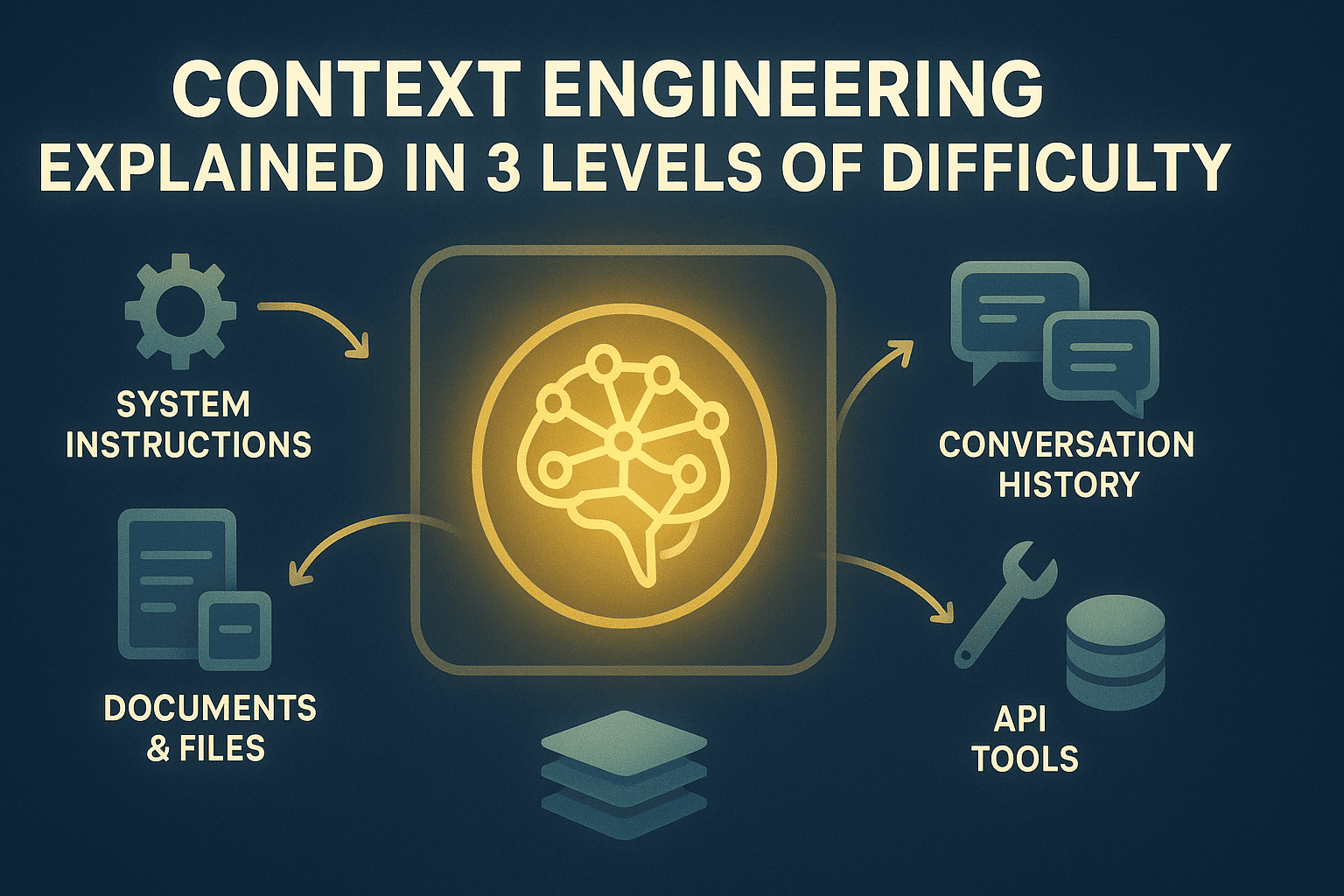

Context engineering explained in 3 levels of difficulty Image by author

# Introduction

Large language model (LLM) applications consistently target context window boundaries. The model forgets prior instructions, loses track of relevant information, or degrades quality along interactions. This is because the LLM has fixed token budgets, but the requests generate unsolicited information. Without management, important information is randomly disconnected or never entered into context.

Context Engineering treats the context window as a managed resource With clear allocation policies and memory systems. You decide what information enters the context, when it enters, how long it stays, and what is compressed or archived on external memory for retrieval. It orchestrates the flow of information at application runtime rather than hoping everything fits or accepting degraded performance.

This article describes context engineering at three levels.

- Understanding the basic need for context engineering

- Implementing process improvement strategies in production systems

- Reviewing modern memory architectures, retrieval systems, and optimization techniques

The following sections explore these levels in detail.

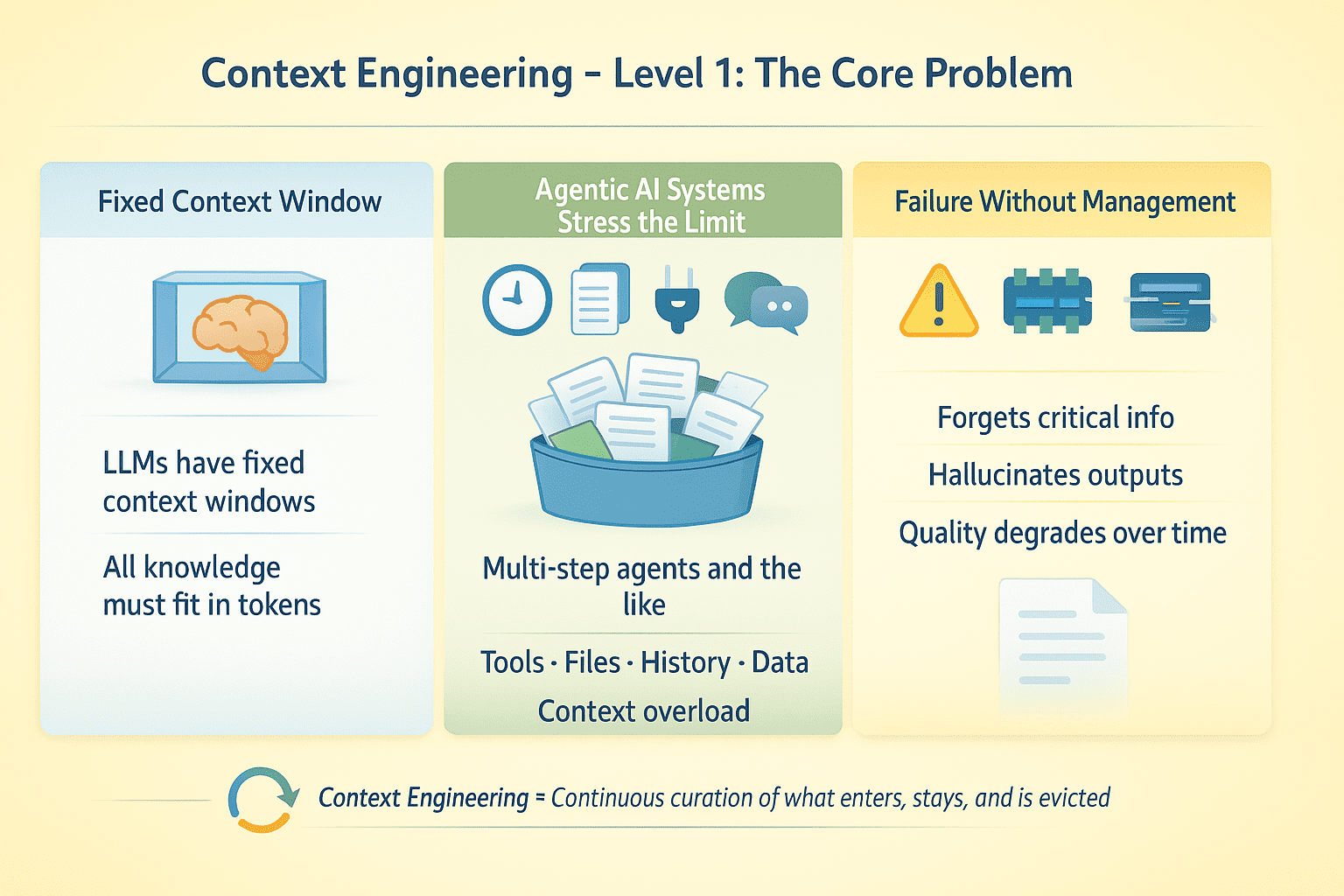

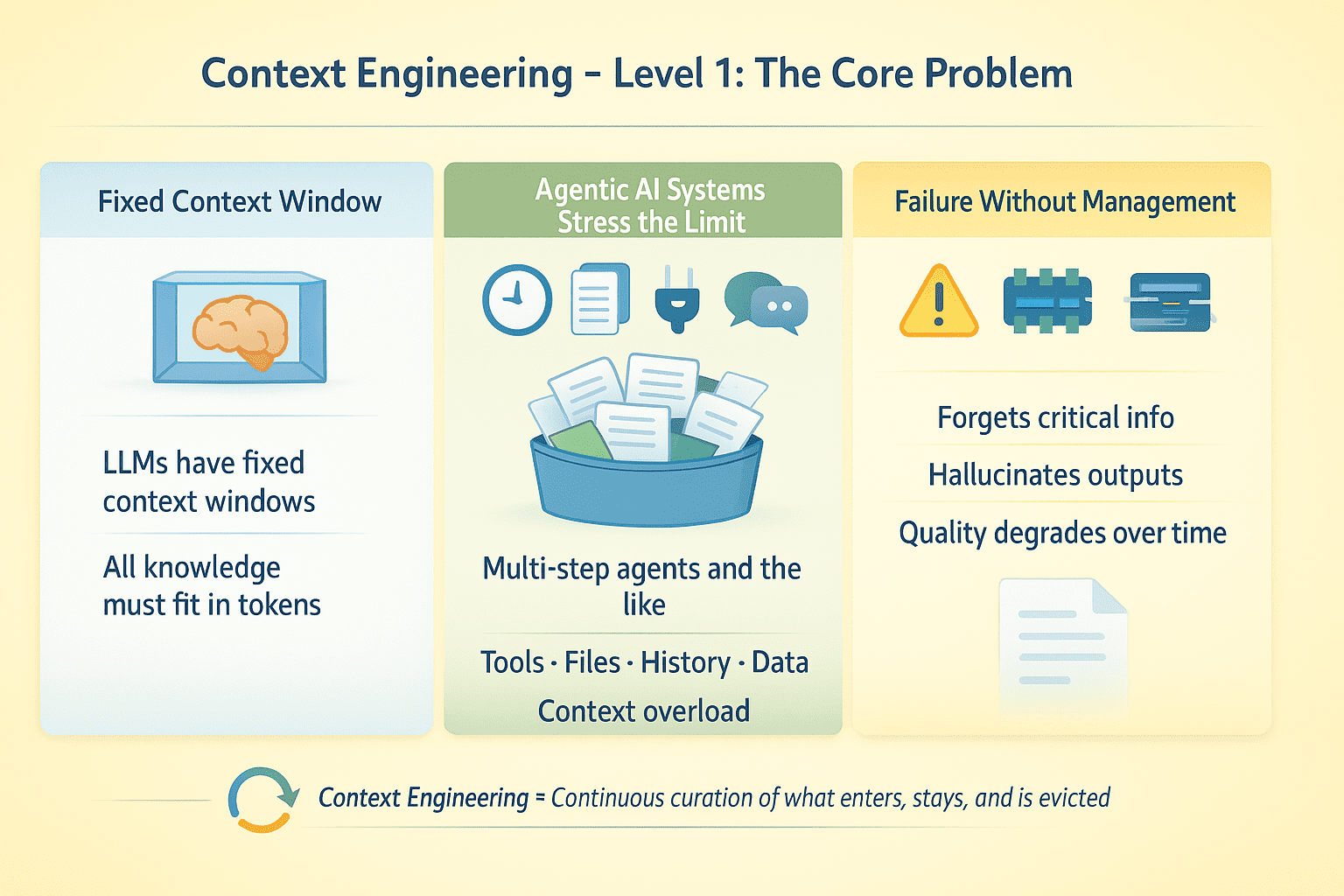

# Level 1: Understanding the Context Constraint

LLM has fixed windows of context. Everything the model knows must fit into these tokens. This is not much of a problem in single turn completion. For retrieval-over-generation (RAG) applications and AI agents that run multitasking tasks with tool calls, file uploads, conversation history, and external data, this poses an optimization problem: What information gets attention and what gets lost?

Say you have an agent that runs for multiple steps, makes 50 API calls, and processes 10 documents. Such an agentic AI system will clearly fail without context management. The model misses critical information, falsifies tool output, or degrades in quality as the conversation expands.

Context Engineering Level 1 | Photo by author

Context engineering is about designing The continuous requirement of an information environment around the LLM throughout its process. It also includes managing what is entered into the context, when, for how long, and what is evicted when space is exhausted.

# Level 2: Improving context in practice

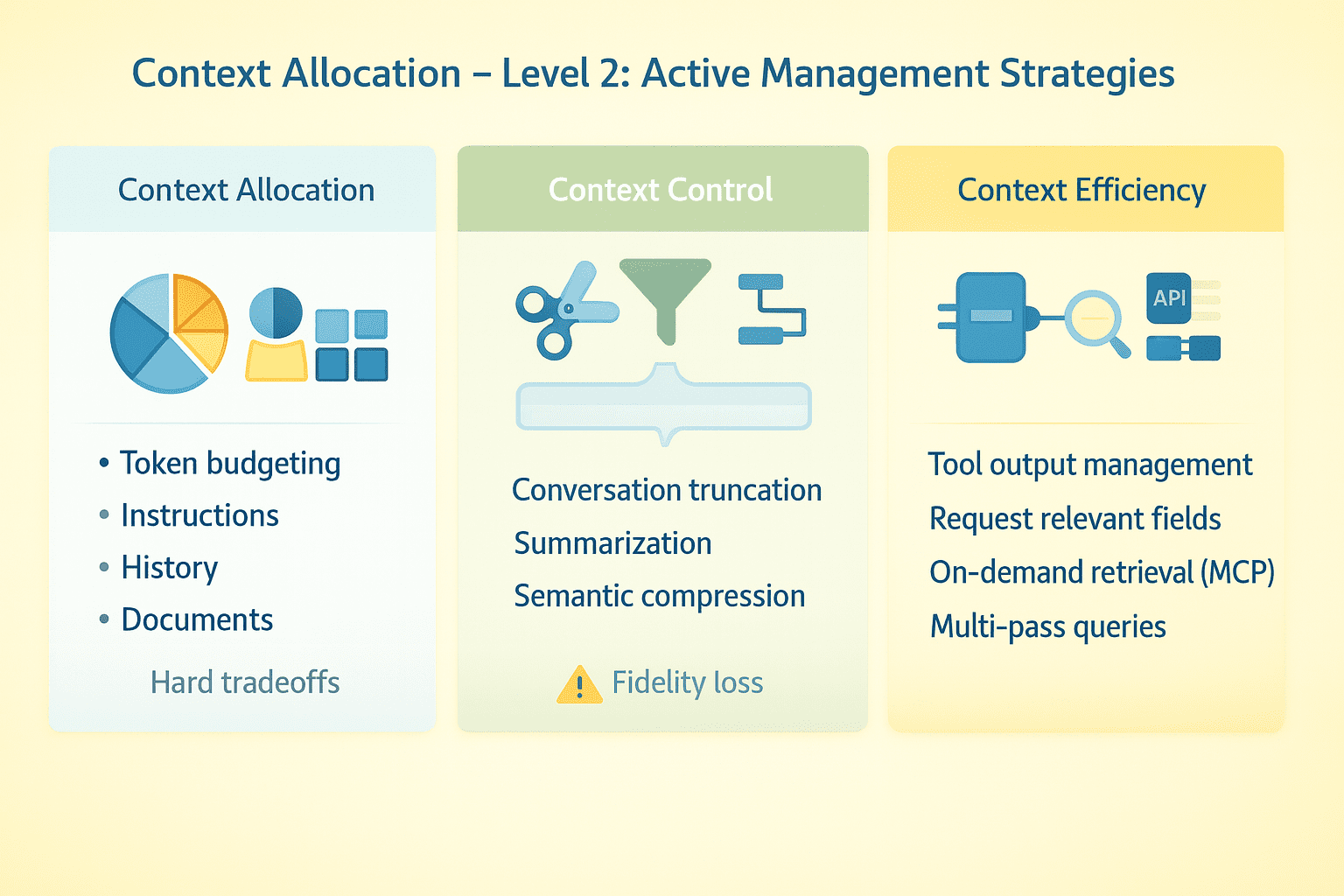

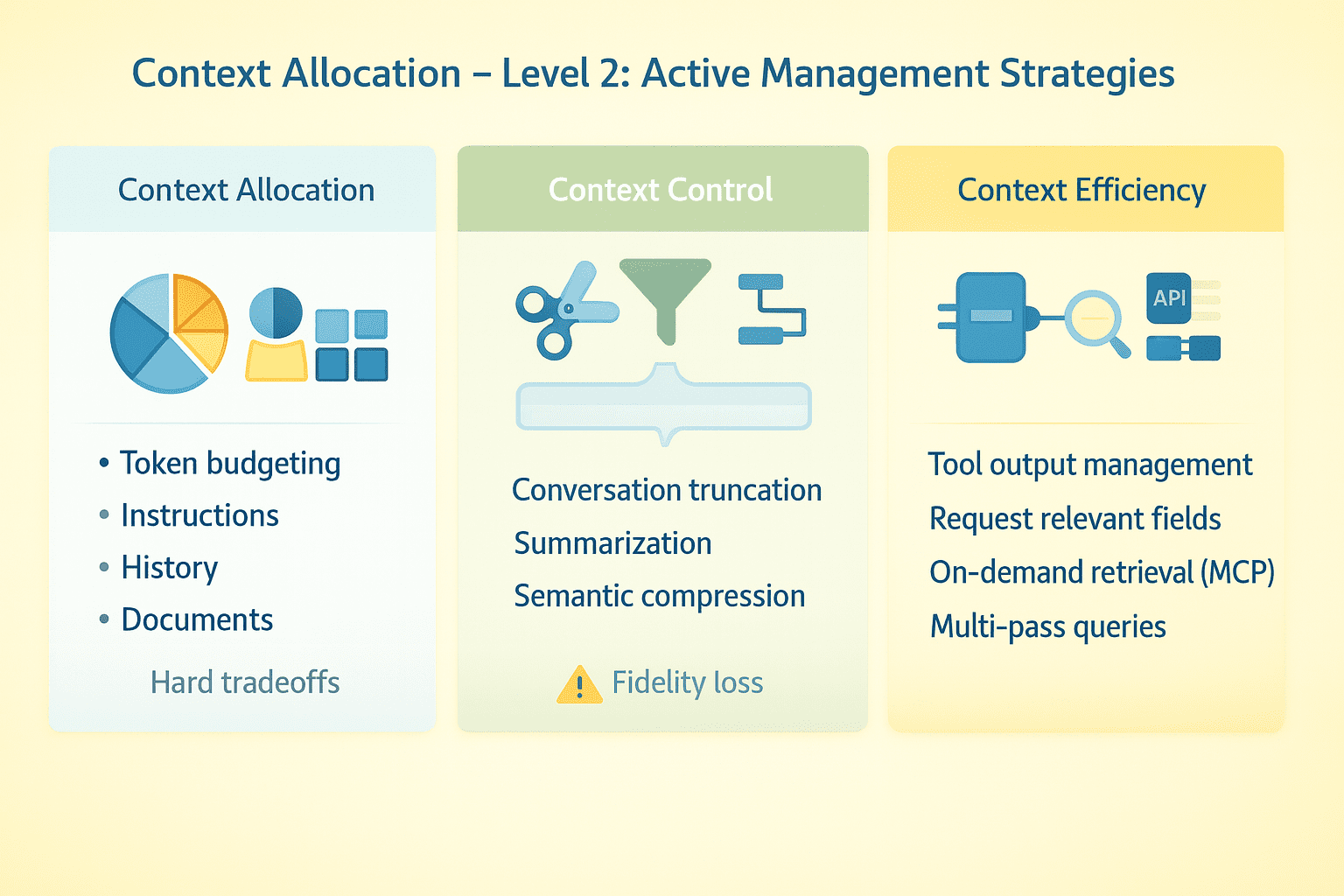

Effective context engineering requires a clear strategy in several dimensions.

// Budgeting token

Deliberately allocate your context window. System instructions can take up to 2K tokens. Conversation history, tool schemas, retrieved documents, and real-time data can all grow exponentially. With a large contextual window, there’s plenty of headroom. With a very small window, you’re forced to make tough trade-offs about what to keep and what to leave.

// Trimming conversation

Keep the current turn, drop the middle turn, and preserve the initial context. Abstract works but loses fidelity. Some implement systems Semantic compression – Eliciting key facts rather than preserving verbatim text. As well as checking your agent breaks down where your agent breaks down.

// Managing Tool Outputs

Larger API responses consume tokens faster. Request specific fields instead of the full payload, summarize them before returning to the model, or use a multipass strategy where the agent first gets the metadata then requests only the details of the relevant objects.

// Using model context protocols and on-demand retrieval

Instead of loading everything up front, connect the model to external data sources and query it when needed. Model context protocol (MCP) Agent decides what to bring based on task requirements. This changes the problem from “fitting everything into context” to “getting the right things at the right time”.

// Separation of structural states

Place static instructions in system messages. Place variable data in user messages where it can be updated or removed without touching the underlying directive. Treat conversation history, tool results, and retrieved documents as separate streams with independent management policies.

Context Engineering Level 2 | Photo by author

The practical shift here is to understand the context as one Dynamic resources that require active management at runtime by an agentnot a static thing you create once.

# Level 3: Implementing context engineering in production

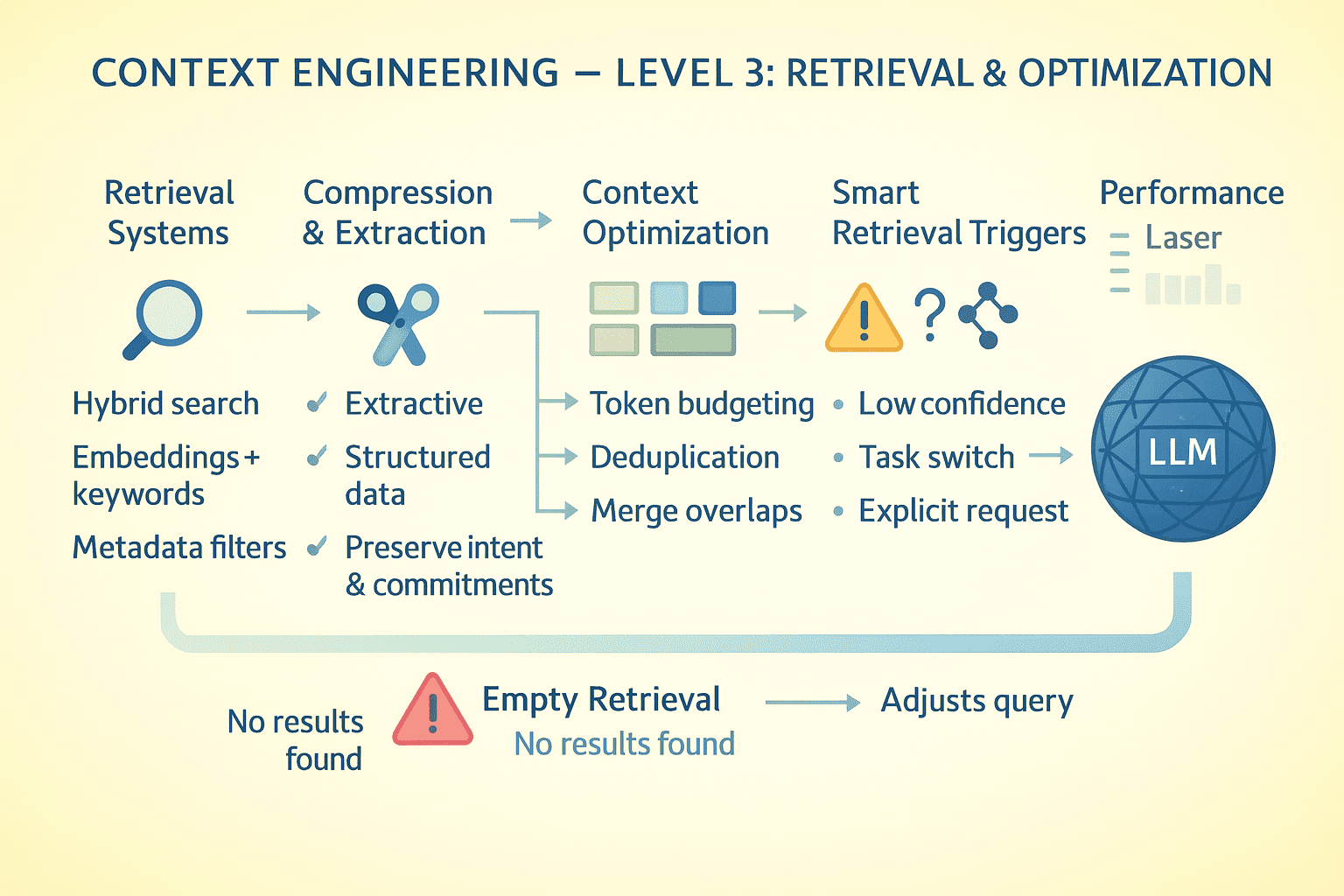

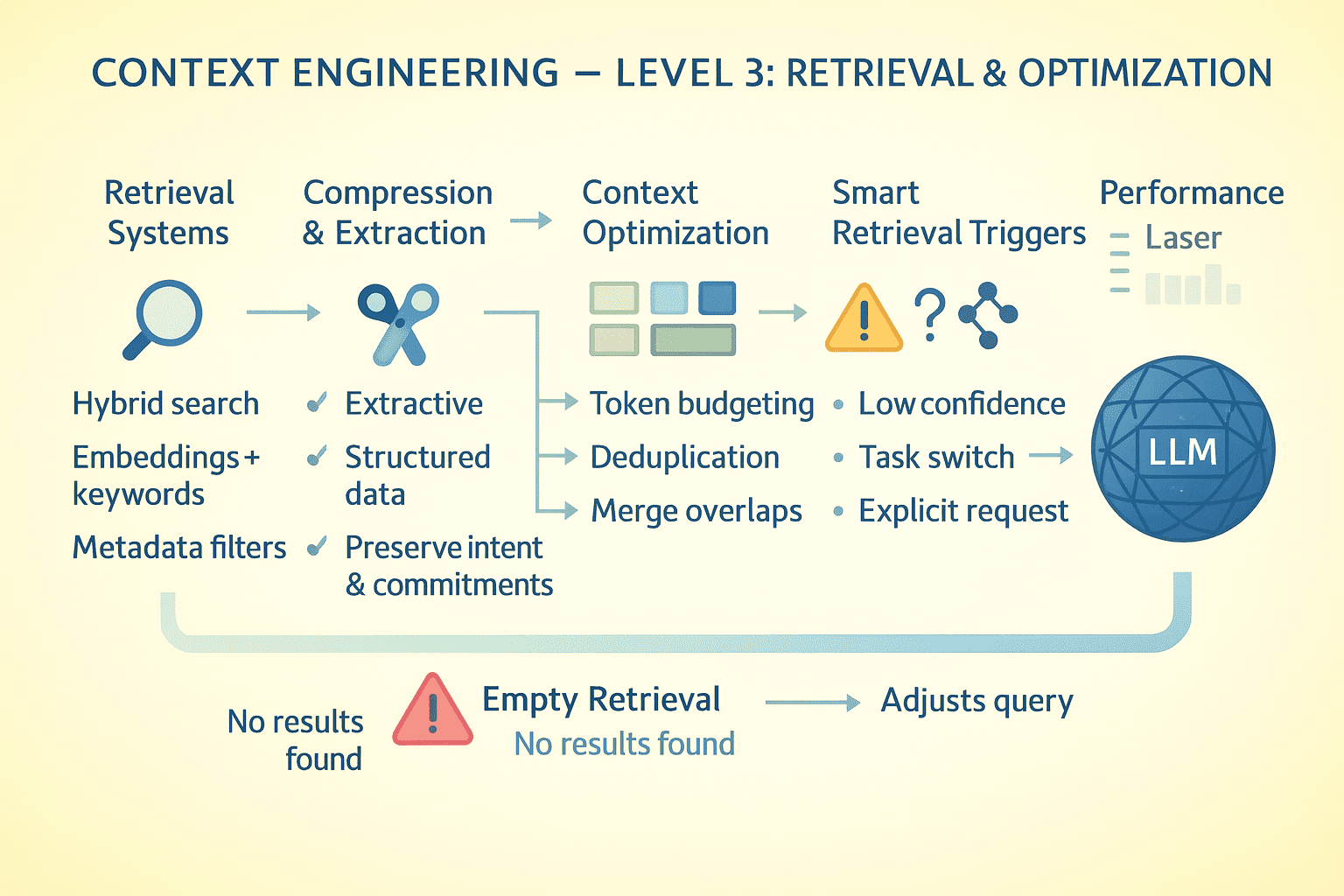

Context engineering at scale requires sophisticated memory architectures, compression strategies, and retrieval systems working in concert. Here’s how to build a production-grade implementation.

// Designing memory architecture patterns

separately Memory in agentic AI systems In grade:

- Working memory (active context window)

- Episodic memory (compressed conversation history and task state)

- Semantic memory (facts, documents, knowledge base)

- Procedural memory (instructions)

Working memory is what the model sees now, which has to be optimized for immediate task needs. What is Episodic Memory Stores? You can compress aggressively but preserve temporal relationships and causal chains. For semantic memory, store indexes by topic, entity, and relevance for fast retrieval.

// Application of compression techniques

A naive summary misses important details. A better approach is extractive compression, where you identify and preserve sentences of high information density while discarding the filler.

- For tool outputs, extract structured data (entities, matrices, relations) instead of process summaries.

- For read interactions, preserve user intentions and agent commitments while compressing reasoning chains.

// Designing a retrieval system

When the model requires out-of-context information, the retrieval criteria determine success. Implement hybrid search: Dense embedding for semantic matching, BM25 for keyword matchingand metadata filters for health.

Results by results, relevance, and information density. Top Loot K but also near the surface of Mrs. ; The model should know what is the closest match. Retrieval takes place in context, so the model sees the query formulation and results. Bad questions produce bad results. Uncover this to enable self-correction.

// Optimizing at the token level

Consistently profile your token usage.

- System instructions using 5K tokens that can be 1K? Rewrite them.

- Tool schemas verbose? Use compact

JSONSchemes rather than wholesOpenAPIEyeglasses - Conversation turns to repeating similar content? Deduplicate.

- Recovered overlapping documents? Merge before adding context.

Each token saved has a token task available for critical information.

// Dynamic memory retrieval

The model should not be constantly retrieved. This is expensive and adds delay. Implement smart triggers: retrieve when the model explicitly requests information, when detecting knowledge gaps, when task switches occur, or when users refer to past context.

When retrieval does not return anything useful, the model should be explicitly aware of this rather than hallucinating. Return empty results with metadata: “No documents were found matching the query X In the knowledge base Y“This allows the model to adjust the strategy by optimizing the query, searching for a different source, or notifying the user.

Context Engineering Level 3 | Photo by author

// Synthesizing information from multiple documents

When reasoning requires multiple sources, proceed hierarchically.

- First pass: extract key facts from each document independently (parallel).

- Second Pass: Contextualize and synthesize the extracted facts.

This avoids context depletion by loading 10 full documents while preserving multi-source reasoning capability. For inconsistent sources, preserve the contrast. Let the model see conflicting information and either resolve it or flag it for the user’s attention.

// Maintain conversational state

For agents that stop and resume, serialize the context state to external storage. Save compressed conversation history, current task graphs, tool outputs, and recovery cache. On restart, reconfigure the minimum necessary context; Don’t reload everything.

// Assessing and measuring performance

Track key metrics to understand how your context engineering strategy is performing. Monitor context usage to see the average percentage of the window used, and eviction frequency to understand how often you’re hitting context limits. Measure retrieval accuracy by checking what portion of the retrieved documents is actually relevant and usable. Finally, track the persistence of information to see how many milestones survive before being lost.

# wrap up

Context engineering is ultimately about information architecture. You’re creating a system where the model has access to everything in its context window and doesn’t have access to everything that isn’t. Every design decision—what to compress, what to retrieve, what to cache, and what to discard—creates the information environment in which your application runs.

If you don’t pay attention to context engineering, your system can be deceptive, forget important details, or break down over time. Get it right and you get an LLM application that remains integrated, reliable and effective in complex, extended interactions despite its basic architectural limitations.

Happy context engineering!

# Referrals and further education

Bala Priya c is a developer and technical writer from India. She loves working at the intersection of mathematics, programming, data science, and content creation. His areas of interest and expertise include devops, data science, and natural language processing. She enjoys reading, writing, coding and coffee! Currently, she is working on learning and sharing her knowledge with the developer community by authoring tutorials, how-to guides, opinion pieces and more. Bala also engages resource reviews and coding lessons.