We all know the story of the first YouTube video, a 19-second clip of YouTube co-founder Jawed Karim commenting on the elephants behind him. That video was a landmark moment for the digital world, and in some ways it is a reflection, or at least a funhouse mirror, of where we find ourselves as we digest the arrival of Veo 3.

Part of Google Gemini, Veo 3 was unveiled at Google I/O 2025 and is the first generative video platform that can produce a video from a single prompt, complete with synchronized dialogue, sound effects, and background noise. After you enter your prompt, most of these 8-second clips arrive in less than 5 minutes.

I’ve been playing with Veo 3 for a couple of days, and for my latest challenge, I tried to go back to the beginning of social video and that YouTube “Me at the zoo” moment. In particular, I wondered whether Veo 3 could recreate this video.

As I’ve written before, the key to good Veo 3 results is the prompt. Without detail and structure, Veo 3 tends to make choices for you, and you usually don’t end up with what you had in mind. For this experiment, I wondered how I could capture all the details I wanted from this short video and hand them to Veo 3 in the form of a prompt. So, naturally, I turned to another AI.

Google Gemini 2.5 Pro is currently unable to analyze a URL, but Google AI Mode, the new search experience that is rapidly rolling out across the United States, can.

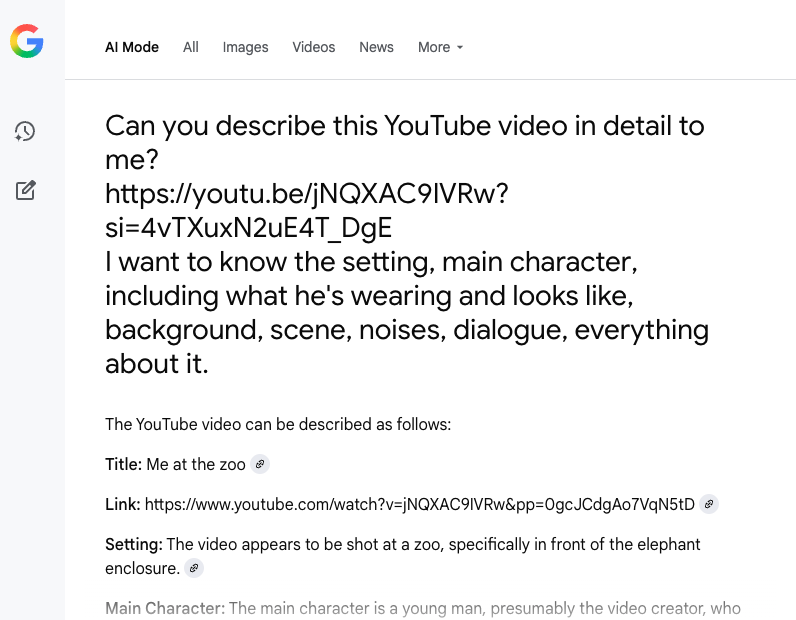

Here is the prompt I entered into Google’s AI Mode:

Google AI Mode almost immediately returned a detailed description, which I copied and dropped into the Gemini Veo 3 prompt field.

I made a few edits, mostly to phrases like “video appears …”, and removed sentences such as the final analysis, but otherwise I left most of it intact and then added:

“Let’s make a video based on these details. The output should be in a 4:3 aspect ratio and look as if it was shot on 8mm videotape.”

The video took a little while to generate (I suspect the service is getting hammered right now), and because Veo 3 produces only 8 seconds at a time, the result was incomplete, cutting the dialogue off mid-sentence.

Still, the result is impressive. I wouldn’t say the main character looks like Karim. To be fair, the prompt doesn’t describe, for example, Karim’s haircut, the shape of his face, or his deep-set eyes. Google AI Mode’s description of his clothing was also thin. I’m sure that if I had provided a screenshot of the original video, Veo 3 would have done a better job.

Note to self: You can never provide too much detail in a generative prompt.

8 seconds at a time

Veo 3’s zoo looks much better than the one in Karim’s video, and the elephants are farther away, though they are moving.

Veo 3 nailed the film stock, which gave it a convincing 2005 feel, but not the 4:3 aspect ratio. It also added odd, unnecessary captions at the top; thankfully, they disappear quickly. I realize now that I should have removed the “title” bit from my prompt.

The audio is especially good. The dialogue is well synced with my main character, and if you listen closely, you can also hear the background noise.

The biggest problem is that it covered only half of the short YouTube video. I wanted a complete recreation, so I went back with a very brief prompt:

Continue the same video with this dialogue, looking at the elephants and then at the camera:

“Trunks, and that’s cool.” “And that’s pretty much all there is to say.”

Veo 3 followed the sequence and kept the main character but lost part of the plot: it dropped the old-school video look of the previously generated clip. This means that when I put the two together (as I did above), we lose considerable continuity. It’s like a film crew’s time jump, where they suddenly found a much better camera.

I’m also a little disappointed that all my Veo 3 videos have nonsensical captions. I need to remember to tell Veo 3 to remove them, hide them, or keep them out of the video frame.

I think about how difficult it was for Karim to film, edit, and upload that first short video, and how I made basically the same clip without needing people, lighting, a microphone, a camera, or elephants. I didn’t even have to transfer footage from tape or from an iPhone. I just pulled it out of an algorithm. Friends, we have truly stepped through the looking glass.

I learned one more thing from this project. As a Google AI Pro member, I get two Veo 3 video generations per day. That means I can do this again tomorrow. Tell me what you want me to make.