https://www.youtube.com/watch?v=c8fk6l1kvxq

In this project walkthrough, we'll explore how to work effectively with large datasets by analyzing startup investment data from Crunchbase. By optimizing memory usage and taking advantage of SQLite, we'll uncover insights about fundraising trends while learning techniques that scale to real-world data challenges.

Working with large datasets is a common challenge in data analysis. When your dataset exceeds your computer's memory capacity, you need smart strategies to process and analyze the data efficiently. This project demonstrates practical techniques for handling medium-to-large datasets that every data professional should know.

Throughout this tutorial, we'll work within a self-imposed constraint of 10MB of memory. By the end, you'll have transformed a 57MB dataset into an optimized database ready for lightning-fast analysis.

What you'll learn

By the end of this tutorial, you'll know how to:

- Process large CSV files in chunks to avoid memory errors

- Identify and remove unnecessary columns to reduce the size of the dataset

- Convert data types to more memory-efficient alternatives

- Load optimized data into SQLite for efficient querying

- Combine pandas and SQL for powerful data analysis workflows

- Diagnose encoding issues and handle non-UTF-8 files

- Create visualizations from aggregated database queries

Before you start: Pre-instruction

To get the most out of this project, follow these preparatory steps:

Review the project

Access the project and familiarize yourself with its goals and structure: Analyzing Startup Fundraising Deals project.

Access the solution notebook

You can view and download it here to see what we'll cover: Solution notebook

Prepare your environment

- If you're using the Dataquest platform, everything is already set up for you

- If working locally, make sure you have pandas and matplotlib installed (the sqlite3 module ships with Python's standard library)

- Download the dataset from GitHub (October 2013 Crunchbase data)

Prerequisites

- Comfortable with Python basics (loops, dictionaries, data types)

- Basic familiarity with pandas DataFrames

- Some SQL knowledge is helpful but not required

New to Markdown? We recommend learning the basics to format headers and add context to your Jupyter notebook: Markdown Guide.

Setting up your environment

Let’s start by importing pandas and understanding the challenge:

import pandas as pd

Before we start working with our dataset, let's understand our constraint. We're simulating a scenario where we have only 10MB of memory available for our analysis. Although this specific limit is artificial for teaching purposes, the techniques we learn apply directly to real-world situations with millions of rows.

Learning Insight: When I first started as a data analyst, I tried to load a 100,000-row dataset on my local machine and it crashed. I was blindsided because I didn't know about optimization techniques. Understanding what causes high memory usage and how to handle it is a valuable skill that will save you countless hours of frustration.

Loading the dataset with chunking

Our first challenge: the CSV file isn't UTF-8 encoded. Let's handle it correctly:

# This won't work - let's see the error

df = pd.read_csv('crunchbase-investments.csv')

When you try this, you'll get a UnicodeDecodeError. This is our first lesson in working with real-world data: it's often messy!

# Let's use chunking with proper encoding
chunk_iter = pd.read_csv('crunchbase-investments.csv',
                         chunksize=5000,
                         encoding='Latin-1')

Learning Insight: When UTF-8 fails, Latin-1 is a good fallback encoding. Think of encodings like languages: if your computer expects English (UTF-8) but gets Spanish (Latin-1), it needs to know how to translate. The encoding parameter simply tells pandas which "language" to expect.
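To make the fallback concrete, here is a minimal standard-library sketch of the same failure and fix on a single byte string. The sample word is an illustrative assumption and is not taken from the Crunchbase file.

```python
# Bytes for "café" as a Latin-1 (ISO-8859-1) encoder would write them.
raw = "café".encode("latin-1")  # b'caf\xe9'

try:
    raw.decode("utf-8")
    utf8_ok = True
except UnicodeDecodeError:
    # In UTF-8, 0xE9 announces a multi-byte sequence, but no continuation bytes follow.
    utf8_ok = False

# Latin-1 maps each of the 256 byte values to a character, so decoding cannot raise.
text = raw.decode("latin-1")

print(utf8_ok)  # False
print(text)     # café
```

Because Latin-1 never raises, it can also decode a file that was written in some other single-byte encoding without complaint, so it is worth spot-checking a few non-ASCII values after loading.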

Now let's find out how big our dataset is:

cb_length = []

# Count rows across all chunks
for chunk in chunk_iter:
    cb_length.append(len(chunk))

total_rows = sum(cb_length)
print(f"Total rows in dataset: {total_rows}")

Total rows in dataset: 52870
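If it helps to see what chunked reading does conceptually, the following is a rough standard-library sketch of the same row-counting idea. It is not how pandas implements chunksize; the iter_chunks helper and the tiny in-memory CSV are hypothetical stand-ins.

```python
import csv
import io
from itertools import islice


def iter_chunks(rows, chunksize):
    """Yield lists of at most `chunksize` rows from any row iterator."""
    while True:
        chunk = list(islice(rows, chunksize))
        if not chunk:
            return
        yield chunk


# Hypothetical stand-in for the CSV file: a header plus 12 data rows.
fake_csv = io.StringIO(
    "id,amount\n" + "".join(f"{i},{i * 100}\n" for i in range(12))
)

reader = csv.reader(fake_csv)
header = next(reader)  # consume the header so it is not counted as data

# Only one chunk of rows is held in memory at a time.
chunk_lengths = [len(chunk) for chunk in iter_chunks(reader, chunksize=5)]
total_rows = sum(chunk_lengths)

print(chunk_lengths)  # [5, 5, 2]
print(total_rows)     # 12
```

Like the pandas iterator, the reader here is exhausted after one pass, which is why the tutorial re-creates chunk_iter before each new loop.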

Identifying optimization opportunities

Finding missing values

Columns with many missing values are prime candidates for removal:

# Re-initialize the chunk iterator (it's exhausted after one pass)
chunk_iter = pd.read_csv('crunchbase-investments.csv',
                         chunksize=5000,
                         encoding='Latin-1')

mv_list = []
for chunk in chunk_iter:
    if not mv_list:  # First chunk - get column names
        columns = chunk.columns
        print("Columns in dataset:")
        print(columns.sort_values())
    mv_list.append(chunk.isnull().sum())

# Combine missing value counts across all chunks
combined_mv_vc = pd.concat(mv_list)
unique_combined_mv_vc = combined_mv_vc.groupby(combined_mv_vc.index).sum()

print("\nMissing values by column:")
print(unique_combined_mv_vc.sort_values(ascending=False))

Columns in dataset:
Index(['company_category_code', 'company_city', 'company_country_code',
       'company_name', 'company_permalink', 'company_region',
       'company_state_code', 'funded_at', 'funded_month', 'funded_quarter',
       'funded_year', 'funding_round_type', 'investor_category_code',
       'investor_city', 'investor_country_code', 'investor_name',
       'investor_permalink', 'investor_region', 'investor_state_code',
       'raised_amount_usd'],
      dtype='object')

Missing values by column:

investor_category_code 50427

investor_state_code 16809

investor_city 12480

investor_country_code 12001

raised_amount_usd 3599

...

Learning Insight: The investor_category_code column has 50,427 missing values out of 52,870 total rows. That's more than 95% missing! This column provides practically no value and is a prime candidate for removal. When a column is missing more than 75% of its values, it's usually safe to drop it entirely.
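The 75% rule of thumb can be checked with a few lines of arithmetic. This sketch uses the five missing-value counts printed above; the function names and the threshold argument are illustrative choices, not part of the original notebook.

```python
def missing_fractions(missing_counts, total_rows):
    """Return the fraction of rows missing for each column."""
    return {col: n / total_rows for col, n in missing_counts.items()}


def columns_over_threshold(missing_counts, total_rows, threshold=0.75):
    """List columns whose missing fraction is strictly above `threshold`."""
    fractions = missing_fractions(missing_counts, total_rows)
    return [col for col, frac in fractions.items() if frac > threshold]


# Counts copied from the "Missing values by column" output above.
missing_counts = {
    "investor_category_code": 50427,
    "investor_state_code": 16809,
    "investor_city": 12480,
    "investor_country_code": 12001,
    "raised_amount_usd": 3599,
}
total_rows = 52870

fractions = missing_fractions(missing_counts, total_rows)
to_drop = columns_over_threshold(missing_counts, total_rows)

for col, frac in fractions.items():
    print(f"{col}: {frac:.1%} missing")
print(to_drop)  # ['investor_category_code']
```

Only investor_category_code crosses the threshold; the next-highest column, investor_state_code, is missing in roughly a third of rows.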

Analyzing memory usage

Let's see which columns consume the most memory:

chunk_iter = pd.read_csv('crunchbase-investments.csv',
                         chunksize=5000,
                         encoding='Latin-1')

counter = 0
series_memory_fp = pd.Series(dtype='float64')

for chunk in chunk_iter:
    if counter == 0:
        series_memory_fp = chunk.memory_usage(deep=True)
    else:
        series_memory_fp += chunk.memory_usage(deep=True)
    counter += 1

# Drop the index memory calculation
series_memory_fp_before = series_memory_fp.drop('Index').sort_values()

print("Memory usage by column (bytes):")
print(series_memory_fp_before)

# Total memory in megabytes
total_memory_mb = series_memory_fp_before.sum() / (1024 * 1024)
print(f"\nTotal memory usage: {total_memory_mb:.2f} MB")

Memory usage by column (bytes):

funded_year 422960

raised_amount_usd 422960

investor_category_code 622424

...

investor_permalink 4980548

dtype: int64

Total memory usage: 56.99 MB

Our dataset uses about 57MB, far more than our 10MB constraint! The permalink columns (URLs) are the biggest memory hogs, which makes them great candidates for removal from our DataFrame.
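A quick sanity check helps explain these numbers. The smallest columns in the output are numeric, and 8 bytes per value accounts for their size exactly. String columns cost more because each value is a separate Python object, which memory_usage(deep=True) includes. The sample permalink below is a made-up value for illustration only.

```python
import sys

rows = 52870          # total rows counted earlier
bytes_per_number = 8  # float64 and int64 both use 8 bytes per value

numeric_column_bytes = rows * bytes_per_number
print(numeric_column_bytes)  # 422960, matching funded_year and raised_amount_usd

# A Python string carries per-object overhead on top of its characters.
# The exact size depends on the Python version and build, so only a
# comparison is shown here rather than a specific number.
sample_permalink = "/company/example-startup"  # hypothetical value
print(sys.getsizeof(sample_permalink) > bytes_per_number)  # True
```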

Implementing optimization strategies

Dropping unnecessary columns

Based on our analysis, let’s remove the columns that will not help our analysis:

# Columns to drop
drop_cols = ['investor_permalink',      # URL - not needed for analysis
             'company_permalink',       # URL - not needed for analysis
             'investor_category_code',  # 95% missing values
             'funded_month',            # Redundant - we have funded_at
             'funded_quarter',          # Redundant - we have funded_at
             'funded_year']             # Redundant - we have funded_at

# Get columns to keep (reading one row is enough to get the header)
chunk = pd.read_csv('crunchbase-investments.csv', nrows=1, encoding='Latin-1')
keep_cols = chunk.columns.drop(drop_cols)

print("Columns we're keeping:")
print(keep_cols.tolist())

Learning Insight: Notice that investor_category_code is the only column we're dropping because of missing data. Although investor_city, investor_state_code, and investor_country_code also contain missing values, they didn't exceed the 75% threshold and were kept for potential future insights.

Also, we're dropping the month, quarter, and year columns even though they contain data. This follows the programming principle "Don't Repeat Yourself" (DRY). Since we have the full date in funded_at, we can extract the month, quarter, or year later if needed. This saves significant memory without losing information.
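As a small illustration of why those three columns are redundant, this sketch derives the year, month, and quarter from a single date string. It assumes dates in ISO YYYY-MM-DD form; the date_parts helper is hypothetical, and the exact format of the dropped columns in the CSV may differ.

```python
from datetime import date


def date_parts(iso_date):
    """Derive year, month, and quarter from a 'YYYY-MM-DD' string."""
    d = date.fromisoformat(iso_date)
    quarter = (d.month - 1) // 3 + 1  # months 1-3 -> Q1, 4-6 -> Q2, and so on
    return {"year": d.year, "month": d.month, "quarter": quarter}


print(date_parts("2012-12-01"))  # {'year': 2012, 'month': 12, 'quarter': 4}
```

Once funded_at is parsed as a datetime in pandas, the same pieces are available through the .dt accessor (for example .dt.year and .dt.quarter).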

Identifying data type optimization opportunities

Let's check which columns can be converted to more efficient data types:

# Analyze data types across chunks
col_types = {}

chunk_iter = pd.read_csv('crunchbase-investments.csv',
                         chunksize=5000,
                         encoding='Latin-1',
                         usecols=keep_cols)

for chunk in chunk_iter:
    for col in chunk.columns:
        if col not in col_types:
            col_types[col] = [str(chunk.dtypes[col])]
        else:
            col_types[col].append(str(chunk.dtypes[col]))

# Get unique types per column
uniq_col_types = {}
for k, v in col_types.items():
    uniq_col_types[k] = set(v)

print("Data types by column:")
for col, types in uniq_col_types.items():
    print(f"{col}: {types}")

Now let's check the unique value counts to find likely category candidates:

unique_values = {}

chunk_iter = pd.read_csv('crunchbase-investments.csv',
                         chunksize=5000,
                         encoding='Latin-1',
                         usecols=keep_cols)

for chunk in chunk_iter:
    for col in chunk.columns:
        if col not in unique_values:
            unique_values[col] = set()
        unique_values[col].update(chunk[col].unique())

print("Unique value counts:")
for col, unique_vals in unique_values.items():
    print(f"{col}: {len(unique_vals)} unique values")

Unique value counts:

company_name: 11574 unique values

company_category_code: 44 unique values

company_country_code: 3 unique values

company_state_code: 51 unique values

...

funding_round_type: 10 unique values

Learning Insight: Any column with fewer than 100 unique values is a good candidate for the category data type. Categories are very memory-efficient for repeated string values. Think of it like a dictionary: instead of storing "USA" 10,000 times, pandas stores it once and uses a reference number.
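The dictionary analogy can be sketched in plain Python. This is a conceptual illustration of dictionary encoding, not pandas' actual internal layout (for instance, pandas sorts inferred categories by default, while this sketch keeps first-seen order). The encode_categories helper and sample values are hypothetical.

```python
def encode_categories(values):
    """Store each distinct value once and represent rows as small integer codes."""
    categories = []  # each distinct value, stored a single time
    index_of = {}    # value -> position in `categories`
    codes = []       # one small integer per row
    for value in values:
        if value not in index_of:
            index_of[value] = len(categories)
            categories.append(value)
        codes.append(index_of[value])
    return categories, codes


values = ["USA", "USA", "CAN", "USA", "GBR", "CAN"]
categories, codes = encode_categories(values)

print(categories)  # ['USA', 'CAN', 'GBR']
print(codes)       # [0, 0, 1, 0, 2, 1]

# The original column can always be rebuilt from the two pieces.
decoded = [categories[c] for c in codes]
print(decoded == values)  # True
```

The savings come from the codes: with fewer than 100 distinct values, each row needs only a small integer instead of a full string object.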

Applying optimizations

Let's load our data with all the optimizations applied:

Note: We're converting funded_at to a datetime data type using the optional parse_dates parameter of pd.read_csv.

# Define categorical columns
col_types = {
    'company_category_code': 'category',
    'funding_round_type': 'category',
    'investor_state_code': 'category',
    'investor_country_code': 'category'
}

# Load with optimizations
chunk_iter = pd.read_csv('crunchbase-investments.csv',
                         chunksize=5000,
                         encoding='Latin-1',
                         usecols=keep_cols,
                         dtype=col_types,
                         parse_dates=['funded_at'])

# Calculate new memory usage
counter = 0
series_memory_fp_after = pd.Series(dtype='float64')

for chunk in chunk_iter:
    if counter == 0:
        series_memory_fp_after = chunk.memory_usage(deep=True)
    else:
        series_memory_fp_after += chunk.memory_usage(deep=True)
    counter += 1

series_memory_fp_after = series_memory_fp_after.drop('Index')
total_memory_after = series_memory_fp_after.sum() / (1024 * 1024)

print("Memory usage before optimization: 56.99 MB")
print(f"Memory usage after optimization: {total_memory_after:.2f} MB")
print(f"Reduction: {((56.99 - total_memory_after) / 56.99 * 100):.1f}%")

Memory usage before optimization: 56.99 MB

Memory usage after optimization: 26.84 MB

Reduction: 52.9%

We've cut our memory usage by more than half!

Loading into SQLite

Now let's load our optimized data into a SQLite database for efficient querying:

import sqlite3

# Create database connection
conn = sqlite3.connect('crunchbase.db')

# Load data in chunks and insert into database
chunk_iter = pd.read_csv('crunchbase-investments.csv',
                         chunksize=5000,
                         encoding='Latin-1',
                         usecols=keep_cols,
                         dtype=col_types,
                         parse_dates=['funded_at'])

for chunk in chunk_iter:
    chunk.to_sql("investments", conn, if_exists='append', index=False)

# Verify the table was created
cursor = conn.cursor()
cursor.execute("SELECT name FROM sqlite_master WHERE type='table';")
tables = cursor.fetchall()
print(f"Tables in database: {[t[0] for t in tables]}")

# Preview the data
cursor.execute("SELECT * FROM investments LIMIT 5;")
print("\nFirst 5 rows:")
for row in cursor.fetchall():
    print(row)

Learning Insight: SQLite is excellent for this use case because once our data is in the database, we no longer have to worry about memory constraints. SQL queries are fast and efficient, especially for aggregation operations. What would require complex pandas operations with chunking can be done in a single SQL query.
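To show the single-query idea in isolation, here is a minimal sketch using an in-memory SQLite database. The table and column names mirror the tutorial, but the rows are invented for illustration and are not from the Crunchbase data.

```python
import sqlite3

conn_demo = sqlite3.connect(":memory:")  # throwaway database, nothing written to disk
conn_demo.execute(
    "CREATE TABLE investments (company_category_code TEXT, raised_amount_usd REAL)"
)

# Hypothetical rows; None becomes SQL NULL.
rows = [
    ("biotech", 4_000_000.0),
    ("biotech", 6_000_000.0),
    ("software", 1_000_000.0),
    ("software", None),
    ("software", 3_000_000.0),
]
conn_demo.executemany("INSERT INTO investments VALUES (?, ?)", rows)

# One query groups, averages, counts, and sorts. AVG and COUNT(column) skip NULLs.
query = """
SELECT company_category_code,
       AVG(raised_amount_usd) AS avg_raised,
       COUNT(raised_amount_usd) AS n_amounts
FROM investments
GROUP BY company_category_code
ORDER BY avg_raised DESC;
"""
results = conn_demo.execute(query).fetchall()
conn_demo.close()

print(results)  # [('biotech', 5000000.0, 2), ('software', 2000000.0, 2)]
```

The database does the grouping itself, so Python only ever receives the small result set rather than the full table.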

Analyzing the data

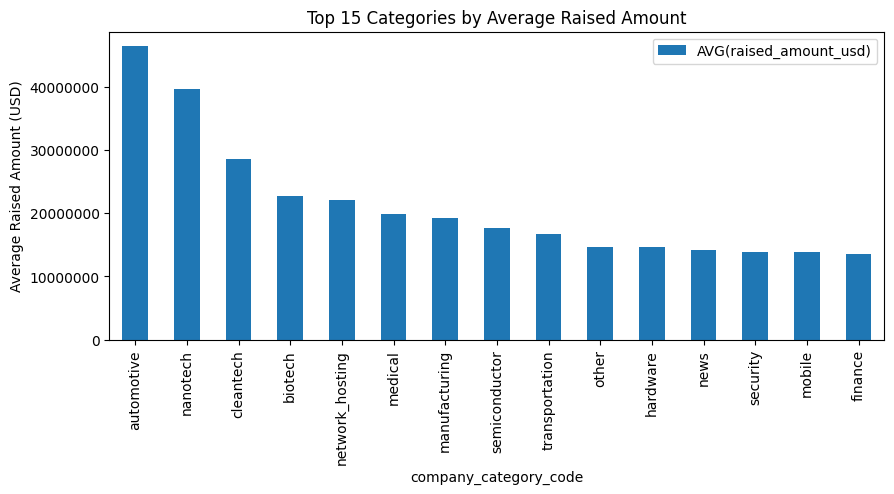

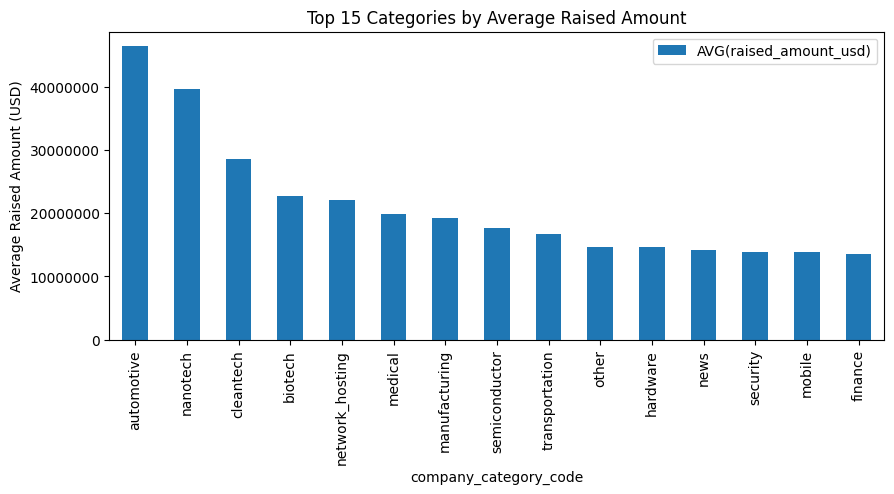

Let's answer a business question: Which categories of companies attract the most investment on average?

import matplotlib.pyplot as plt  # needed for plt.tight_layout() and plt.show() below

# Use pandas to read SQL results directly into a DataFrame
query = """
SELECT company_category_code,
       AVG(raised_amount_usd) AS avg_raised
FROM investments
WHERE raised_amount_usd IS NOT NULL  -- Exclude nulls for accurate average
GROUP BY company_category_code
ORDER BY avg_raised DESC;
"""

df_results = pd.read_sql(query, conn)

# Visualize top 15 categories
top_n = 15
ax = df_results.head(top_n).set_index('company_category_code').plot(
    kind='bar',
    figsize=(10, 6),
    title=f'Top {top_n} Categories by Average Investment Amount',
    ylabel='Average Investment (USD)',
    legend=False
)

# Format y-axis to show actual numbers instead of scientific notation
ax.ticklabel_format(style='plain', axis='y')

plt.tight_layout()
plt.show()

Key takeaways and next steps

Through this project, we've learned essential techniques for handling large datasets:

- Chunking prevents memory errors: Process data in manageable pieces when the full dataset exceeds available memory

- Data type optimization matters: Converting to category and appropriate numeric types can reduce memory by 50% or more

- Remove redundant columns: Don't store the same information in multiple ways

- SQLite enables fast analysis: Once optimized and loaded, complex queries run in seconds

- Encoding issues are common: Real-world data often isn't UTF-8. Know your alternatives

Homework challenges

To deepen your understanding, try these extensions:

- Further optimization: Can you convert raised_amount_usd from float64 to float32 or a smaller type? Test whether it preserves enough precision.

- Advanced analysis questions:

  - What proportion of total funding went to the top 10% of companies?

  - Which investors contributed the most money overall?

  - Which funding rounds (seed, series A, etc.) are the most common?

  - Are there seasonal patterns in funding? (Hint: use the funded_at column)

- Data quality: Investigate why the original CSV isn't UTF-8 encoded. Can you find and fix the problematic characters?
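As a starting point for the float32 challenge, this standard-library sketch shows where single precision stops representing whole numbers exactly. It round-trips values through a 4-byte float with struct rather than using pandas or NumPy, and the to_float32 helper is a hypothetical name.

```python
import struct


def to_float32(value):
    """Round-trip a Python float (float64) through 4-byte IEEE 754 single precision."""
    return struct.unpack("<f", struct.pack("<f", value))[0]


exact = 16_777_216.0      # 2**24: still exactly representable in float32
not_exact = 16_777_217.0  # 2**24 + 1: falls between two float32 values

print(to_float32(exact) == exact)  # True
print(to_float32(not_exact))       # 16777216.0
```

Above 2**24 (about 16.8 million), float32 can no longer represent every whole number, so large dollar amounts may shift slightly. Whether that matters depends on the precision your analysis needs.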

Personal Reflection: As someone who moved from math education to data analysis, I remember struggling with memory problems and encoding errors. But these challenges taught me that real-world data is messy, and learning to handle that messiness is what separates good analysts from great ones. Every optimization technique you learn enables you to tackle bigger, more interesting datasets.

This project shows that with the right techniques, you can analyze datasets larger than your available memory. Whether you're working with startup data, customer records, or sensor readings, these optimization strategies will serve you well throughout your data career.

If you're new to pandas and found these concepts challenging, start with our Processing Large Datasets in Pandas course. For a deeper dive into data engineering, see our Data Engineering path.

Happy analyzing!